Content moderation on social media sites remains a hotly contested topic.

This year, congressional committees have held not one but two hearings on the "filtering practices" of social media networks. And while some of these lawmakers raised the question, "Are networks suppressing content from one stream of thought or another?", there's another big question in the ether these days.

Are social media companies responsible for the content published on their networks -- especially when that content is factually incorrect?

65% of People Think Social Media Sites Should Remove This Content

The Current Climate

The above question arose at a recent hearing on foreign influence on social media platforms, where Senator Ron Wyden broached the topic of Section 230: a provision of the 1996 Communications Decency Act that, as the Electronic Frontier Foundation describes it, shields web hosts from "legal claims arising from hosting information written by third parties."

But some, including Wyden himself, argue that those protections are out of date, considering the evolution of content distribution channels online, and both the volume and nature of the content being shared on them.

Ron Wyden: "I just want to be clear, as the author of section 230, the days when these [platforms] are considered neutral are over." cc: all of Silicon Valley

— David McCabe (@dmccabe) August 1, 2018

That includes content pertaining to conspiracy theories, or that is otherwise factually incorrect.

The former has been top-of-mind for many in recent weeks, with the removal of accounts belonging to Alex Jones -- a media host and conspiracy theorist who attempts to frame mass shootings and other tragedies as hoaxes -- from Facebook, Apple, and YouTube.

But what is the public opinion on the matter -- and to what extent do online audiences believe social media platforms are responsible for the presence of this content on their sites?

The Data

The Content Itself

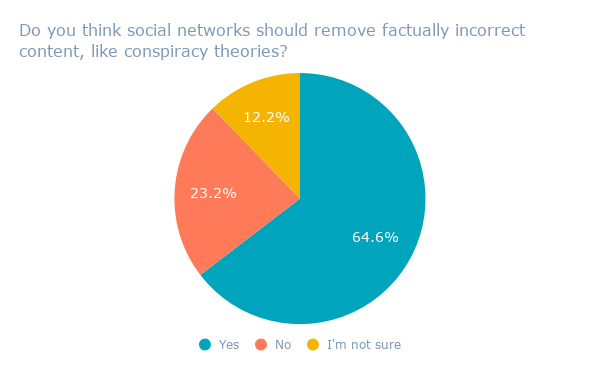

We asked 646 internet users across the U.S., UK, and Canada: Do you think social networks should remove factually incorrect content, like conspiracy theories?

On average, 65% of respondents said yes, with the highest segment (67%) based in the UK.

[Image: Responses by Region]

Data collected with Lucid

The Accounts and Users Sharing It

Then, we wanted to know how people felt about the moderation of the publishers of that content: the accounts and users distributing it or sharing it on social media.

We asked 647 internet users across the U.S., UK, and Canada: Do you think social networks should remove users or accounts that post factually incorrect content, like conspiracy theories?

On average, 65% of respondents said yes, with the highest segment (68%) based in the U.S.

[Image: Do you think social networks should remove users or accounts that post factually incorrect content, like conspiracy theories?]

[Image: Responses by Region]

Data collected with Lucid

The Responses in Context

Conflicting Standards

Many point to a lack of transparency around the practice of content moderation as a major cause of certain networks' inability to more quickly remove information and accounts of this nature.

As @sheeraf writes on Facebook, the problem is transparency. They don't and won't say how many strikes someone like Alex Jones gets before a post or account is taken down. How is anyone left to believe there isn't editorial judgment here?

— CeciliaKang (@ceciliakang) August 8, 2018

In an interview with Recode's Kara Swisher, Facebook CEO Mark Zuckerberg offered very little in terms of a tangible explanation of how the network decides what -- and who -- is allowed to publish or be published on its site.

"As abhorrent as some of those examples are," he said at the time, "I just don’t think that it is the right thing to say, 'We’re going to take someone off the platform if they get things wrong, even multiple times.'"

After Facebook later removed several Pages belonging to Jones, the company published a vague explanation of its criteria for removing these Pages.

As company executives have explained in the past, Pages and their admins receive a "strike" each time they publish content in violation of the network's Community Standards. And once a certain number of strikes is reached, the Page is unpublished entirely.

What Facebook will not say, however, is the strike threshold that must be reached before a Page is unpublished. It remains mum, the statement says, because "we don’t want people to game the system, so we do not share the specific number of strikes that leads to a temporary block or permanent suspension."

But that statement could suggest that, if the system can be gamed at all, different Pages may be given different thresholds, or some may receive strikes more easily than others.

The objectivity of content moderation remains a challenge. And despite Facebook's publication of its Community Standards for public consumption, certain reports -- like an undercover investigation from Channel 4 -- indicate that content moderators are often given instructions that conflict with those very standards.

Dispatches reveals the racist meme that Facebook moderators used as an example of acceptable content to leave on their platform.

— Channel 4 Dispatches (@C4Dispatches) July 17, 2018

Facebook have removed the content since Channel 4’s revelations.

Warning: distressing content. pic.twitter.com/riVka6LcPS

The Moderation Onus

There appears to be a widespread phenomenon of social media networks downplaying their own responsibility for moderating this type of content.

While Facebook, Apple, and YouTube actively removed content from Jones and Infowars -- which is said by some to be far from a sustainable solution -- Twitter has allowed this content to remain on the platform, claiming that it's not in violation of the network's rules.

Accounts like Jones' can often sensationalize issues and spread unsubstantiated rumors, so it’s critical journalists document, validate, and refute such information directly so people can form their own opinions. This is what serves the public conversation best.

— jack (@jack) August 8, 2018

Twitter CEO Jack Dorsey went so far as to place that responsibility not on the network, but on journalists, who he said should "document, validate, and refute" claims made by parties like Jones -- something that has, in fact, been done repeatedly.

The timing of Dorsey's statement is particularly curious, given the company's recent selection of proposals to study its conversational and network health.

The inconsistent response by various platforms to content from, and accounts belonging to, Jones and Infowars points to flaws in the development and enforcement of community standards and rules. Where one network won't reveal how many strikes until "you're out," another says targeted harassment isn't tolerated on its site -- and yet dismisses many reports of it as non-violating.

Hey @jack did I do this right pic.twitter.com/OafLJsO12F

— Caroline Moss (@socarolinesays) August 8, 2018

"The differing approaches to Mr. Jones exposed how unevenly tech companies enforce their rules on hate speech and offensive content," writes The New York Times. "When left to make their own decisions, the tech companies often struggle with their roles as the arbiters of speech and leave false information, upset users and confusing decisions in their wake."
